Loveless Digitalization - Why do we have to choose between security & comfort?

In the "Loveless Digitalization" series, I briefly present a case of digital transformation that fails to deliver on its potential and outline what a better product or process could look like.

The problem:

Cybersecurity has been a complex challenge for organizations and individuals ever since digital products and services were created. The fact that we use more and more complex tools in almost every aspect of our lives makes cybersecurity all the more difficult and important. New features and possibilities also open up new ways for malicious actors to exploit vulnerabilities. On top of that, the burden of securing digital products and services is often delegated to a secondary market - e.g. to develop firewalls and anti-virus software - or to users - e.g. by making them responsible for updating their devices and saving backups of their data. This hasn't helped the situation, as countless statistics on the rise of cyberattacks and their success rate show, see e.g. here.

It is only natural, then, that digital service providers are thinking about how to make their users feel safer in the digital world. But too often, the measures introduced to protect users are designed in a way that forces a false dichotomy onto them: choosing between security and comfort.

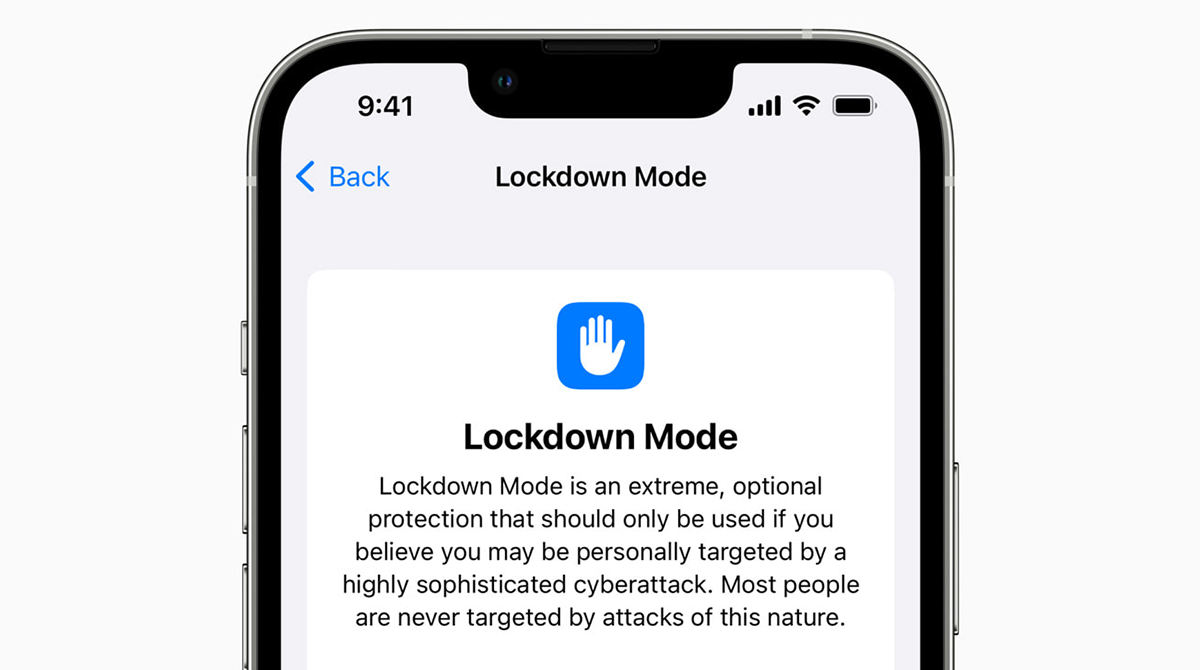

One example is Lockdown Mode on iOS devices, which is explicitly designed to protect those devices from even sophisticated attacks. A key element is turning off certain features to close off vulnerabilities, which leads to a limited user experience. But security features in other environments illustrate the same point. Features like blocking certain software and functions or notifying the user about risks are often poorly designed and nudge the user towards unsafe behaviour. You open a browser and receive a security pop-up asking whether you want to give the application access to the local network. You download an application, but when you open it nothing happens, until you discover the option hidden deep within the system preferences that allows you to open it. And so on.

This reinforces the above-mentioned false dichotomy: as a user today, you either accept a significant cutback in user experience - from missing icons in an app to the point that things simply do not work - or you choose comfort and turn down the dial on cybersecurity measures, leaving yourself exposed.

A better way:

Ideally, security and comfort wouldn't be opposites but two sides of the same coin: well-designed digital products and services. This would require digital service providers to stop outsourcing cybersecurity and delegating it down the chain, and instead to adopt practices like security-by-design effectively. But even if you maintain that there will always be a trade-off between cybersecurity and comfort, there are still things that can be done.

Software developers should be more deliberate about their choices to ensure that the behaviour of their products doesn't raise red flags. Does your software really need a constant internet connection? Why does your mobile game need access to my contacts? Security measures implemented at the operating system level have actually helped draw attention to hidden business models and sloppy programming on the part of digital service providers.

Nevertheless, designers of operating systems and security measures also need to do better. Pop-ups intended to inform users fail to do just that: poorly written, cryptic or overly generalized information about supposedly risky behaviour does not empower users to make well-informed decisions. It overwhelms them, increasing the risk that they will turn off cybersecurity measures out of frustration. I'm not suggesting that this is the point; I believe that these security features were well-intentioned. But if that is the case, they need to do a better job of actually empowering users to make good decisions about whether to grant access to certain data, allow certain network access etc. This requires being much more transparent and clear, in language that is also understandable to people without a computer science degree.
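To make this a little more concrete, here is a minimal sketch in Python of what "transparent and clear" could mean for a permission pop-up. It is purely illustrative and not modelled on any real operating system API: the names `PermissionPrompt` and `build_prompt`, the three-line layout and the example wording are all my own assumptions. The idea is simply that a prompt cannot be shown at all unless it says, in plain words, what is being accessed, why, and what happens if the user says no.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PermissionPrompt:
    """Hypothetical model of a permission pop-up (not a real OS API)."""
    app_name: str
    resource: str   # what is accessed, in everyday words ("your contacts")
    reason: str     # why the app asks, in one plain sentence
    if_denied: str  # what stops working if the user says no

    def render(self) -> str:
        # Plain-language layout: what, why, and the consequence of saying no.
        return (
            f"{self.app_name} wants to access {self.resource}.\n"
            f"Why: {self.reason}\n"
            f"If you say no: {self.if_denied}"
        )


def build_prompt(app_name: str, resource: str, reason: str, if_denied: str) -> PermissionPrompt:
    """Refuse to build a prompt that leaves any explanation blank."""
    fields = {
        "app_name": app_name,
        "resource": resource,
        "reason": reason,
        "if_denied": if_denied,
    }
    for label, value in fields.items():
        if not value.strip():
            raise ValueError(f"A permission prompt needs a non-empty '{label}'.")
    return PermissionPrompt(app_name, resource, reason, if_denied)


if __name__ == "__main__":
    prompt = build_prompt(
        app_name="Example Game",
        resource="your contacts",
        reason="To let you invite friends to play with you.",
        if_denied="You can still play, but you cannot invite friends from your contacts.",
    )
    print(prompt.render())
```

A check like this obviously cannot guarantee that the explanation is honest or well written, but it turns "explain yourself to the user" from an afterthought into a precondition.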

So, the next time you're designing a new product or security feature, ask yourself: am I reinforcing a false dichotomy? And am I doing my best to keep users and their data safe without confusing them?