White Mirror - Artificial Intentions

In the "White Mirror" series I talk about positive ideas for using digital technologies in ways that can improve our lives. The title is a reaction to the popular series "Black Mirror" and its often dystopian vision of our digital future. While highlighting potential dangers is important, shining a light on the positive potential of digital technologies seems to me at least as important.

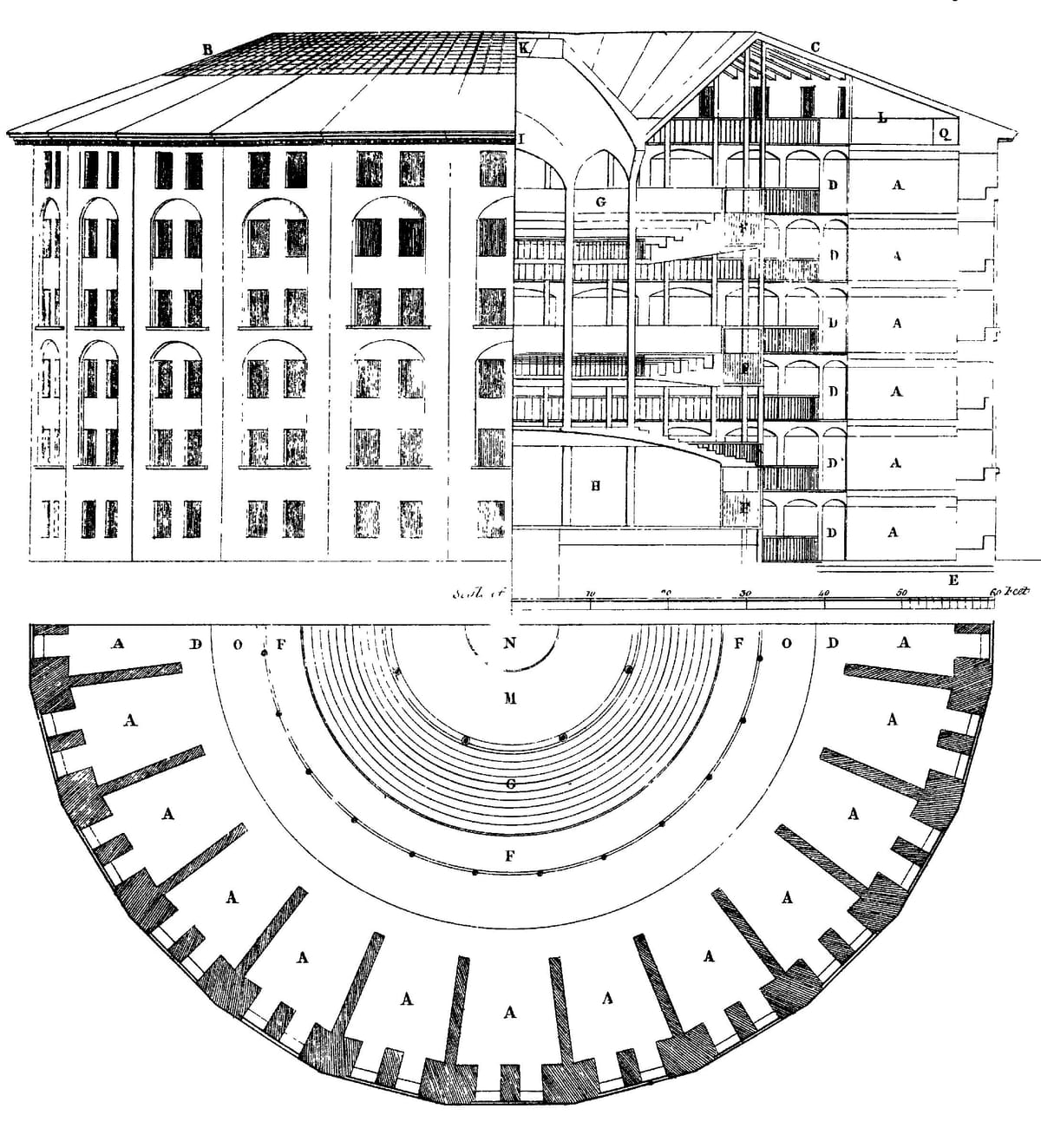

Measuring and controlling the behavior of individuals is a longstanding topic not just in technology but also in philosophy. Countless thought experiments and concepts have been developed, chief among them Jeremy Bentham's Panopticon: a prison design in which a single guard, stationed at a central point, can observe every inmate. Because inmates know they could be watched at any moment, they change their behavior even when the guard is not actually watching them.

Digital technologies have taken this idea of constant surveillance out of the prison and allowed authorities and companies to apply the same logic to our everyday lives. While authorities are more concerned with security and with ensuring compliance with certain rules, companies are interested in gathering as much information about us as possible to infer our intentions and wishes. But there is another way!

The issue

The digital advertising market has been growing rapidly ever since the early days of the internet. A key selling point is the promise of higher conversion rates: because advertisers know more about the intentions of customers, less advertising is wasted. With a traditional billboard or a newspaper advertisement, you can't really control who sees your message. But in the digital world, so the promise goes, you can show it only to people who might actually be interested.

Of course, this promise rests on the idea that you can collect information about individuals at scale and analyze it for advertising purposes. This collection and analysis of information is not only possible thanks to digital technologies, it has become the dominant business model for many technology companies. Hence the quip: if a digital service is free, you are paying with your data.

This increasing surveillance has gained mainstream attention with the rise of social media. But artificial intelligence is making matters worse: as people open up to chatbots about their most intimate beliefs, questions, and desires, AI companies are pivoting towards advertising.

This almost inescapable surveillance for the purpose of advertising is raising concerns among consumers and policymakers around the globe, and rightly so. Leaving aside the unanswered question of whether surveillance-based advertising actually delivers on its lofty promises, it is clear that this level of surveillance infringes on privacy, a fundamental right in liberal democracies.

The idea

Let's challenge the assumption that our desires can be inferred by watching us just a bit more closely. Let's flip the script and give control and ownership back to the individual. Instead of constantly watching us and analyzing our every move and click in the hope of increasing the probability that we click on an online advertisement, let's give individuals back the power to say what they want!

Thanks to digital technologies, we can easily imagine a profile that is controlled by the individual and captures the common choices relevant to online advertising and online shopping. My favorite food? My shoe size? My hobbies? I can define them myself in my profile and modify them at any time. When I open an online store, the provider no longer needs to pay a data broker to figure out what I want. My profile tells them directly.
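To make the idea a little more concrete, here is a minimal sketch of what such a user-controlled profile might look like. It is purely illustrative and assumes no existing standard: the class `UserProfile`, its fields, and the store-side function `pick_offers` are hypothetical names. The point it shows is that the store only ever sees what the individual has explicitly declared and shared, with nothing inferred.

```python
import json
from dataclasses import asdict, dataclass, field, fields
from typing import List, Optional


@dataclass
class UserProfile:
    """Hypothetical user-controlled profile; field names are illustrative only."""
    favorite_food: Optional[str] = None
    shoe_size: Optional[float] = None
    hobbies: List[str] = field(default_factory=list)

    def update(self, **changes):
        # The individual can change any declared field at any time.
        allowed = {f.name for f in fields(self)}
        for key, value in changes.items():
            if key not in allowed:
                raise KeyError(f"unknown profile field: {key}")
            setattr(self, key, value)

    def share(self) -> str:
        # Only what the user has declared is shared; nothing is inferred.
        return json.dumps(asdict(self))


def pick_offers(shared_profile: str, catalog: List[dict]) -> List[str]:
    """Hypothetical store-side logic: match catalog items to declared hobbies."""
    profile = json.loads(shared_profile)
    hobbies = set(profile["hobbies"])
    return [item["name"] for item in catalog if item["category"] in hobbies]


profile = UserProfile(favorite_food="pasta", shoe_size=42, hobbies=["hiking"])
profile.update(hobbies=["hiking", "cycling"])
catalog = [
    {"name": "Trail boots", "category": "hiking"},
    {"name": "Bike helmet", "category": "cycling"},
    {"name": "Golf clubs", "category": "golf"},
]
print(pick_offers(profile.share(), catalog))  # ['Trail boots', 'Bike helmet']
```

In this sketch the store never needs a data broker: it receives the shared profile and filters its own catalog. A real open standard would of course need to settle which fields exist and how consent to share them is given.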

Such a profile would still allow for advertising, but without sacrificing the fundamental right to privacy, and it would eliminate data brokers as the current middlemen. I get to define my own wishes instead of having them inferred by a third party through constant surveillance. Policymakers and industry could come together to define a data model and an open standard that make such a profile easy to implement. Let's get started!